***This article originally appeared in the February ’23 issue of Animation Magazine (No. 327)***

Higx Point Render for Nuke

Everyone loves smoky, tendrilled and wispy effects. I don’t know why, but there’s just nothing like a gorgeous nebula in space, or how ink moves when dropped in water, or the way Wanda conjures up wiggly-woo magic in Marvel movies. When these are created in CG, they’re usually made in a 3D program with particles, a bunch of wacky forces and, for those beautiful tendrils, a simulation of a gazillion particles.

Back in my day, you had to export passes with slightly different parameters and then comp them back together because our workstations couldn’t handle the particle count. But now there is a blazingly fast plug-in for Nuke called Point Render, developed by Higx (Mads Hagbarth), which brings these cool effects into the compositor using Nuke’s BlinkScript in the 3D work area. The speed comes from it being a point renderer (hence the name), which means it’s handling a point per pixel rather than actual particles. And the points are additive, meaning that when points land on top of each other, they get brighter. There is no occlusion and no lighting. This is the signature look of Higx Point Render: cool, energy-like forms that swirl and undulate.
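
To make the additive, occlusion-free idea concrete, here is a minimal Python sketch of a point splatter. It is not Higx’s BlinkScript code; the pinhole camera, the function names `project` and `render_points`, and the tiny 8×8 frame are illustrative assumptions. Each point is projected through the camera and adds its intensity to exactly one pixel, with no depth test and no shading.

```python
import math


def project(point, focal=1.0, width=8, height=8):
    """Pinhole-project a camera-space point (x, y, z) looking down +z.

    Returns integer pixel coordinates, or None if the point is behind the
    camera or lands outside the frame.
    """
    x, y, z = point
    if z <= 0:
        return None
    # Perspective divide, then map normalized [-1, 1] coords to pixels.
    u = focal * x / z
    v = focal * y / z
    px = math.floor((u + 1.0) * 0.5 * width)
    py = math.floor((1.0 - (v + 1.0) * 0.5) * height)  # image rows grow downward
    if 0 <= px < width and 0 <= py < height:
        return px, py
    return None


def render_points(points, intensity=0.25, width=8, height=8, focal=1.0):
    """Splat each point into a single pixel, adding its intensity.

    No depth test and no lighting: points that land on the same pixel
    simply accumulate, so dense regions glow brighter.
    """
    image = [[0.0] * width for _ in range(height)]
    for p in points:
        hit = project(p, focal, width, height)
        if hit is not None:
            px, py = hit
            image[py][px] += intensity
    return image


# Three points on the same camera ray stack up on one pixel.
img = render_points([(0.0, 0.0, 2.0), (0.0, 0.0, 4.0), (0.0, 0.0, 8.0), (0.5, 0.5, 2.0)])
print(img[4][4], img[3][5])  # 0.75 0.25
```

A production renderer adds motion blur, depth of field and far higher resolutions; the sketch only shows that brightness comes from accumulation rather than from lighting.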

The basic workflow is pretty straightforward: You set up a point generator, modulate and modify it with nodes that add fractal noise, twists or custom expressions, and then render with the Point Render node through a camera. But it’s the layering of these that gives the imagery its beautiful complexity. Because you can use cameras, the points live within the 3D space of the shot, so they track with all your other elements. In addition, the render supports motion blur and depth of field.
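
The generator-then-modifiers chain can be sketched as plain functions applied in order, the way nodes stack in a tree. The names `sphere_generator`, `twist` and `wobble` are hypothetical stand-ins, not Point Render’s actual nodes, and `wobble` uses a few stacked sine octaves as a cheap placeholder for real fractal noise.

```python
import math
import random


def sphere_generator(count, radius=1.0, seed=0):
    """Hypothetical generator: scatter points uniformly inside a sphere."""
    rng = random.Random(seed)
    points = []
    while len(points) < count:
        p = (rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1))
        if p[0] ** 2 + p[1] ** 2 + p[2] ** 2 <= 1.0:  # rejection sampling
            points.append(tuple(radius * c for c in p))
    return points


def twist(points, turns_per_unit=0.5):
    """Rotate each point around the Y axis by an angle proportional to its height."""
    out = []
    for x, y, z in points:
        a = 2 * math.pi * turns_per_unit * y
        c, s = math.cos(a), math.sin(a)
        out.append((c * x + s * z, y, -s * x + c * z))
    return out


def wobble(points, amount=0.1, frequency=3.0):
    """Placeholder for fractal noise: three sine octaves displacing X."""
    out = []
    for x, y, z in points:
        d = 0.0
        for octave in range(3):
            f = frequency * 2 ** octave
            d += math.sin(f * y + 1.7 * f * z) / 2 ** octave
        out.append((x + amount * d, y, z))
    return out


def build(count=1000):
    """Layer the steps in order: generator -> twist -> wobble."""
    pts = sphere_generator(count)
    pts = twist(pts)
    return wobble(pts)


points = build(1000)
```

Because each step takes and returns a plain list of points, reordering or repeating modifiers is just a matter of calling them in a different sequence, which is where the layered complexity comes from.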

Point Render uses only Nuke native nodes, so it will keep working as Nuke gets upgrades. What’s even cooler is that the nodes are open source. The BlinkScript in the nodes is accessible, so you can poke around under the hood to find out how it works. And you can even customize the code for your own purposes.

To summarize, it’s powerful, fast and, at $157, well worth the price. Not only can you have it in your toolkit, but you’ll also be supporting the talented folks who are out there making cool tools for you.

Website: higx.net/point_render

Price: $157

Adobe Creative Cloud 2023

Adobe recently announced its plans for Creative Cloud for the fall, which should be out in the world by the time this article hits newsstands. This next-generation CC features 18 upgraded desktop applications: Photoshop, Illustrator, InDesign, InCopy, Animate, XD, Dreamweaver, Premiere Rush, Premiere Pro, After Effects, Audition, Character Animator, Media Encoder, Prelude, Bridge, Camera Raw, Lightroom and Lightroom Classic.

There are a lot of advances in most of the apps, but there is also a bunch of cross-pollination. So for this article, I’m going to focus on Premiere Pro, because it reaches into the new tools for After Effects and Audition as well. There aren’t any huge updates to the actual “editing” part of Premiere Pro, but there are some sweet additions to the supplemental things that make our projects look and sound good.

A primary upgrade is a set of tools that will change your whole color workflow. It’s called Selective Color Grading. Those familiar with Nuke will know this as HueCorrect. Basically, it lays out your color wheel as a horizontal line. You then add control points to isolate the specific hue you want to modify or select. The resulting curve can be as soft or hard as you wish, depending on how precisely you want to isolate the hue. This can be used to adjust luma, shift hue or, as I have been doing quite a bit, despill (which allows you to specify a color from the image and separate it from the alpha bias). It is extremely powerful, and once you wrap your head around it, there won’t be a day that goes by when you don’t crack it open.
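
The hue-curve idea can be sketched in Python: control points sit along a 0 to 360 degree hue axis, values are interpolated between them, and the result scales saturation for pixels of that hue. This is not Adobe’s implementation; the function names, the linear interpolation and the saturation-scaling rule are simplifying assumptions for illustration.

```python
import colorsys


def hue_curve(control_points):
    """Build a function mapping hue (degrees) to an adjustment value.

    control_points: (hue_degrees, value) pairs with hues in [0, 360].
    Values are linearly interpolated between neighboring points, and the
    curve wraps at 360 degrees because hue is circular.
    """
    pts = sorted(control_points)
    # Pad both ends so the last point connects back around to the first.
    ext = [(pts[-1][0] - 360.0, pts[-1][1])] + pts + [(pts[0][0] + 360.0, pts[0][1])]

    def evaluate(hue):
        hue %= 360.0
        for (h0, v0), (h1, v1) in zip(ext, ext[1:]):
            if h0 <= hue <= h1:
                t = 0.0 if h1 == h0 else (hue - h0) / (h1 - h0)
                return v0 + t * (v1 - v0)
        return 0.0  # only reached if control hues fall outside [0, 360]

    return evaluate


def apply_saturation_curve(rgb, curve):
    """Scale saturation by (1 + curve(hue)); a value of -1 fully desaturates."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    s = min(1.0, max(0.0, s * (1.0 + curve(h * 360.0))))
    return colorsys.hsv_to_rgb(h, s, v)


# Remove saturation at pure green (120 degrees), fading out toward 60 and 180.
green_kill = hue_curve([(60.0, 0.0), (120.0, -1.0), (180.0, 0.0)])
print(green_kill(90.0))  # halfway down the slope: -0.5
```

Moving the 60 and 180 points closer to 120 makes the isolation harder; spreading them apart makes it softer.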

After Effects has more powerful motion graphics templates that can be ported over to Premiere Pro. This means the motion graphics artist can design the titles or branding for a spot or TV show and then send them over to the editor. Parameters can be promoted so that only the components that will change are exposed. This way, editors can update credits, lower-thirds text, etc., while keeping the artist’s base animation. It’s a huge timesaver to let text changes happen in editorial, and it keeps the artist free to make new, super cool graphics for the next show.

As an extension of the motion graphics tool, Premiere Pro can accept data from a CSV spreadsheet, and then utilize the data therein to automagically create infographics. This also saves a lot of time, allowing the data to drive the animation.
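
As a rough illustration of data driving the animation, the sketch below reads a CSV with Python’s standard `csv` module and scales one column into bar heights that an animation could then be keyed to. The column names and the `bars_from_csv` function are hypothetical; Premiere Pro handles this through its own interface, not code like this.

```python
import csv
import io


def bars_from_csv(csv_text, value_column, label_column, max_height=100.0):
    """Turn one numeric CSV column into (label, bar_height) pairs.

    The largest value maps to max_height; the rest scale proportionally.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    values = [float(r[value_column]) for r in rows]
    peak = max(values) if values else 0.0
    bars = []
    for row, value in zip(rows, values):
        height = 0.0 if peak == 0 else max_height * value / peak
        bars.append((row[label_column], height))
    return bars


data = """region,sales
North,120
South,60
East,30
"""
print(bars_from_csv(data, "sales", "region"))
# -> [('North', 100.0), ('South', 50.0), ('East', 25.0)]
```

Editing the spreadsheet and re-reading it is all it takes to update the chart, which is the time saving the feature is after.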

But my absolute favorite new tools lie in the audio. Using Sensei, Adobe’s AI/machine learning algorithms, Premiere Pro and Audition have intelligent denoising and de-reverbing tools. By analyzing your audio (and presumably numerous other audio samples), Sensei learns what is the sound you want and what is undesirable noise. It can then separate them. The same holds for de-reverb: after learning, Sensei detects the additional echoes (the reverberation) of the primary sound and filters them out. I can think of at least 20 things I need this for, and that’s just off the top of my head!

Website: adobe.com/creativecloud

Price: All Apps, $82.49/mo. (monthly), $54.99/mo. (yearly), $599.88/yr. (yearly upfront); Premiere Pro, $20.99/mo.

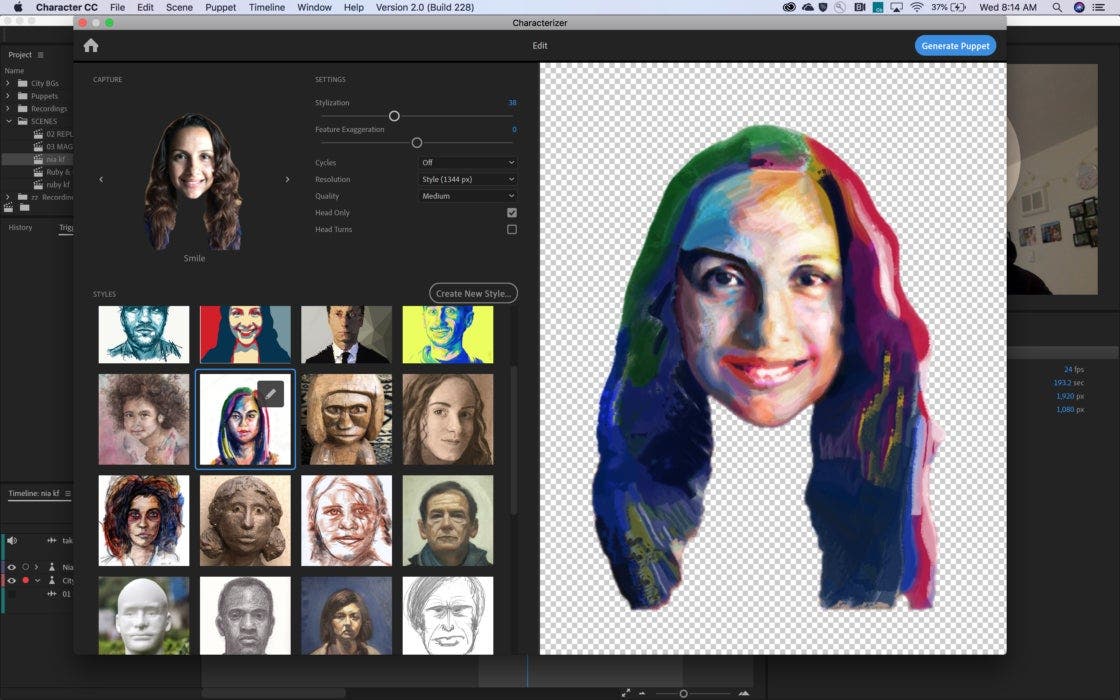

Adobe Characterizer

A few months ago, Characterizer was also announced as part of Adobe’s Character Animator CC release. In its first incarnation, Character Animator didn’t bowl me over. I felt it was an easy way to make things move, which basically opened up animation to people who had no experience doing it in the first place. That leads to horrible animation, which results in judgments against the tools rather than the talent, from people like me, for instance. However, I also knew that when it got into the hands of capable animators, we would start to see some ingenious stuff. The animated segments we saw on The Late Show with Stephen Colbert and Tooning Out the News are perfect examples of how the technology can be used in live situations.

Feedback from these real production situations helps Adobe focus further development of its tools. Character Animator seems to have started as a quaint idea rather than a fully formed development plan, but it has been driven by user feedback to become a better product. This is a textbook example of how app development should work: Release frequently, fail quickly, pivot and rerelease.

I see this in Characterizer as well, which seems to have started as the seed of an idea that doesn’t yet know the strength of its own potential. Characterizer can take samples of your face from your webcam, analyze the poses and, via Sensei, learn which parts of your face are which. You can then apply different artistic styles to your visage. This is not limited to “pencil sketch,” “charcoal” or “oil painting”: you can use photos of objects whose color palette will be transferred to you. However, the process doesn’t need to be entirely automated. There are tools to define which areas of the sample image apply to which of your features.

Once you have something you like, the result can be tied into Character Animator rigs that can be driven by your own performance through your webcam or other cameras. Now you have a live performance that could be a pen-and-ink drawing … or a wall of bricks. It’s absolutely fascinating.

The results currently range from artsy to terrifying, but not necessarily artistic. This is because we are very early in the evolution of Characterizer. Sensei is still learning how people’s faces work and how to apply the looks to them. The more it learns, the more refined the results will become. And the more refined the results, the more users will be attracted to it. That, of course, means Sensei will gain even better knowledge and artistic expertise. Some have predicted major advances in Characterizer within the next five years. I have a feeling those people are underestimating the power of machine learning.

Website: adobe.com

Price: Included in Adobe CC suite, see above.

Todd Sheridan Perry is an award-winning VFX supervisor and digital artist whose credits include Black Panther, Avengers: Age of Ultron, The Christmas Chronicles and Three Busy Debras. You can reach him at todd@teaspoonvfx.com.
